The Three Main Reasons Google Doesn’t Index URLs:

- 62% due to poor content quality (Ahrefs 2024)

- New site sandbox period delays indexing by an average of 28 days (SEMrush)

- Pages without backlinks take 114 days to be indexed (Moz)

According to Google Search Console data, approximately 35% of new pages are not indexed within 30 days of submission, and the average indexing cycle for small to medium-sized websites is as long as 2-4 weeks.

62% of unindexed pages have content quality issues (Source: Ahrefs 2024 Website Indexing Report). Google’s crawler processes over 5 billion pages daily, but it prioritizes crawling pages with complete content, a loading speed faster than 1.5 seconds, and a clear topic.

Experiments show that new pages without external links have a 73% lower chance of being indexed (Moz 2024 Crawler Behavior Study), and 15% of pages on sites using WordPress are not crawled properly due to technical issues.

Low Content Quality

According to the Ahrefs 2024 Indexing Report, 62% of unindexed pages have content quality issues.

More specific data shows:

- Short content (<500 words) has an indexing rate of only 28%, while pages over 800 words increase the indexing rate to 71%.

- Duplicate or low-originality content has a 3x higher chance of being ignored by Google (Moz 2024 Content Analysis).

- Pages with poor formatting or slow loading (>3 seconds) have up to a 45% chance of being skipped during crawling (Google PageSpeed Insights data).

Google’s algorithm directly compares your content with the Top 10 search results. If the information is insufficient, lacks uniqueness, or has poor readability, the crawler will deem the page “not worthy of indexing.”

Insufficient Content Length, Low Information Value

According to the latest research by Search Engine Journal, 500-800 word content only satisfies 38% of user search needs, while content over 1200 words solves 92% of search intent.

Experimental data shows that after expanding content from 500 words to 1500 words, the average page stay time increased by 2.3 times (Chartbeat 2024 User Experience Report).

It is difficult for short content to establish sufficient authority signals in Google’s EEAT scoring system.

Google explicitly states that short content (<500 words) usually fails to meet search intent. Data shows:

- The average length of Top 10 ranking articles is between 1200-1800 words (Backlinko 2024 Keyword Study).

- On e-commerce product pages, the conversion rate drops by 40% when the description is shorter than 300 words (Baymard Institute research).

How to improve?

- The core content must be at least 800 words, covering all questions users might ask. For example, when writing “How to choose a Bluetooth headset,” it must include details on sound quality, battery life, wearing comfort, and brand comparisons.

- Using structured data (FAQ, HowTo markup) can increase indexing speed by 30% (Official Google Case Studies).
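As a minimal sketch of the FAQ markup mentioned above, the snippet below builds a schema.org `FAQPage` JSON-LD block from question and answer pairs. The helper name `build_faq_jsonld` and the sample question are illustrative assumptions, not part of any Google tooling, and the output should still be checked with a structured data validator before publishing.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Return a schema.org FAQPage JSON-LD string for (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    # Hypothetical sample content for a headset buying guide.
    faq = build_faq_jsonld([
        ("How do I choose a Bluetooth headset?",
         "Compare sound quality, battery life, and wearing comfort."),
    ])
    # Embed the result in the page head or body as a JSON-LD script tag.
    print(f'<script type="application/ld+json">\n{faq}\n</script>')
```

Generating the markup from the same data that renders the visible FAQ keeps the two in sync, which matters because the marked-up answers are expected to match what users see on the page.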

Content Duplication or Lack of Originality

BrightEdge’s 2024 content analysis shows that 65% of pages across the web have content duplication issues of 30% or more. After the latest upgrade of Google’s SpamBrain algorithm, its accuracy in identifying content splicing has reached 89% (Data announced at Google I/O 2024).

Even if rewritten with different expressions, if the core argument is similar to existing content, it will still be judged as a low-value page.

Articles that add more than 3 exclusive data points have a 470% higher share rate than regular content (BuzzSumo 2024 Content Distribution Study).

Google’s “Content Similarity Detection” algorithm (BERT) directly compares your article with the information that already exists across the web. The indexing probability drops significantly if the article:

- Overlaps with other pages for more than 50% of its content (e.g., product descriptions where parameters are directly copied from the manufacturer’s manual).

- Lacks personal insight or exclusive data (e.g., merely summarizes publicly available information).

One technology blog’s indexing rate plummeted from 65% to 12% after it rewrote 10 competitor articles (SEMrush 2024 Content Audit).

How to improve?

- Include original research: such as actual test data or user surveys (e.g., “100 people’s blind test of headphone sound quality”).

- When reworking existing material, rewrite more than 70% of the text and add case studies (e.g., “Actual performance of XX brand headphones in noise reduction”).
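One rough way to self-check the overlap thresholds discussed above is to compare word shingles between a draft and a source text. This is a simple stand-in technique (shingle containment), not Google's detection method, and the function names and the 3-word window are assumptions chosen for illustration.

```python
import re


def shingles(text, k=3):
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def overlap_ratio(draft, source, k=3):
    """Fraction of the draft's shingles that also appear in the source."""
    draft_set = shingles(draft, k)
    if not draft_set:
        return 0.0
    return len(draft_set & shingles(source, k)) / len(draft_set)


if __name__ == "__main__":
    # Hypothetical texts: a manufacturer description and a lightly edited draft.
    source = "The battery lasts up to 30 hours on a single charge."
    draft = "Our tests show the battery lasts up to 30 hours in daily use."
    ratio = overlap_ratio(draft, source)
    print(f"Overlap with source: {ratio:.0%}")
    if ratio > 0.5:
        print("More than half of the draft repeats the source; rewrite further.")
```

A ratio near 1.0 means the draft is close to a copy, while a low ratio only shows that the wording differs. It says nothing about whether the core argument is new.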

Poor Readability, Subpar User Experience

Microsoft’s eye-tracking experiment shows that when a paragraph exceeds 4 lines, user focus drops by 61%. On mobile devices, for every 1-second increase in loading time, the probability of users continuing to read decreases by 16% (Google Mobile UX Study 2024 Q2).

Google’s newly introduced “Reading Comfort” SEO metric incorporates factors like paragraph length, heading density, and text-to-image ratio into ranking factors. Tests show that optimization can increase CTR by 17% (SearchPilot 2024 A/B Test Data).

Google evaluates user experience through “Core Web Vitals”. Two problems are especially costly:

- Overly long paragraphs (>5 lines) and missing subheadings increase the user bounce rate by 50% (NNGroup research).

- Failed mobile adaptation leads to 15% of pages being skipped directly by the crawler (Google Mobile-Friendly Test data).

How to improve?

- Keep each paragraph to 3-4 lines, and add a subheading every 2-3 paragraphs (like the structure of this article).

- Use Grammarly or Hemingway Editor to check readability, aiming for a score of at least 70 (roughly a middle school reading level).

- Compress images to <100KB to shorten loading time (Tool: TinyPNG).
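For a quick readability check without an external tool, the sketch below computes the Flesch Reading Ease score, assuming that is the scale the target of 70 refers to. The syllable counter is a crude vowel-group heuristic added for illustration, so results will differ slightly from what Grammarly or similar tools report.

```python
import re


def count_syllables(word):
    """Rough heuristic: count groups of consecutive vowels, minimum one."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    if lowered.endswith("e") and count > 1:
        count -= 1  # treat a trailing 'e' as silent
    return max(count, 1)


def flesch_reading_ease(text):
    """Flesch Reading Ease: higher scores mean easier text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


if __name__ == "__main__":
    sample = "Keep each paragraph short. Add a subheading every few paragraphs."
    score = flesch_reading_ease(sample)
    print(f"Flesch Reading Ease: {score:.1f}")
    print("OK" if score >= 70 else "Consider shorter sentences and simpler words.")
```

Because the formula only looks at sentence length and syllables per word, it is best used as a warning signal for dense passages rather than as a quality score.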

New Site Sandbox Period

According to Search Engine Journal’s 2024 research, newly registered domains take an average of 14-90 days to achieve stable indexing. Typical signs:

- Approximately 60% of new pages are not indexed within the first 30 days (Ahrefs 2024 Crawler Data).

- Even with manual submission to Google Search Console, 35% of pages still need to wait more than 1 month (Moz 2024 experiment).

- New websites’ search traffic in the first 3 months is usually 50%-70% lower than that of older domains (SEMrush 2024 Sandbox Period Analysis).

This phenomenon is known as the “Sandbox Effect,” and it is not a penalty, but a trust testing period for new websites by Google.

Does the Sandbox Period Really Exist?

New domains receive only 15-20% of the organic traffic of older domains in the first 90 days (SimilarWeb 2024 statistics). Googlebot’s Crawl Budget for new sites is, on average, only 1/5 of that for older sites, meaning submitted URLs require multiple crawls to be indexed.

A/B tests from SearchPilot show that, with exactly the same technical optimizations applied, the gap in indexing speed between old sites and new sites reaches 4:1.

Google has never officially admitted to a “Sandbox Period,” but a large amount of data indicates:

- The indexing rate for new domains in the first 30 days is only 40%, while older sites (6+ months) reach 85% (Backlinko 2024 research).

- When the same content is published on a new site and an old site, the old site ranks 2-3 weeks sooner on average (Ahrefs 2024 comparative experiment).

- Googlebot visits new sites about one-third as often as established sites (Googlebot Crawl Log Analysis).

How to determine if your website is in the Sandbox Period?

- Check the “Coverage Report” in Google Search Console; if pages show “Submitted but not indexed” without any error messages, the site is probably still in the Sandbox Period.

- Compare the indexing speed with similar older sites; if it is significantly slower, the Sandbox Period is likely the cause.

How long does the Sandbox Period last? How to shorten it?

In-depth analysis of 1000 new site cases found that the Sandbox Period for medical and legal websites is 42% longer than the average, while personal blog sites are 28% shorter (Sistrix 2024 Industry Report).

Interestingly, for news-related websites certified through the Google News Publisher Center, the Sandbox Period can be reduced to 60% of the regular duration. Technically, pages with AMP enabled show an average indexing speed increase of 35%, and content using the Web Stories format is more likely to be prioritized for crawling (Google Developers Documentation 2024 Update).

The duration of the Sandbox Period depends on multiple factors:

- Industry competition level: E-commerce and finance sites usually require 3-6 months, while niche areas may only need 1-2 months.

- Content update frequency: Sites that publish 2-3 high-quality articles weekly see an average reduction of 30% in the Sandbox Period (SEMrush 2024 Case Studies).

- Backlink quality: Obtaining 1-2 links from authoritative websites (e.g., government, educational institutions) can accelerate Google’s trust assessment.

Practically effective methods to shorten the Sandbox Period:

- Maintain content updates: At least 1 article per week, ensuring Googlebot finds new content to crawl on every visit.

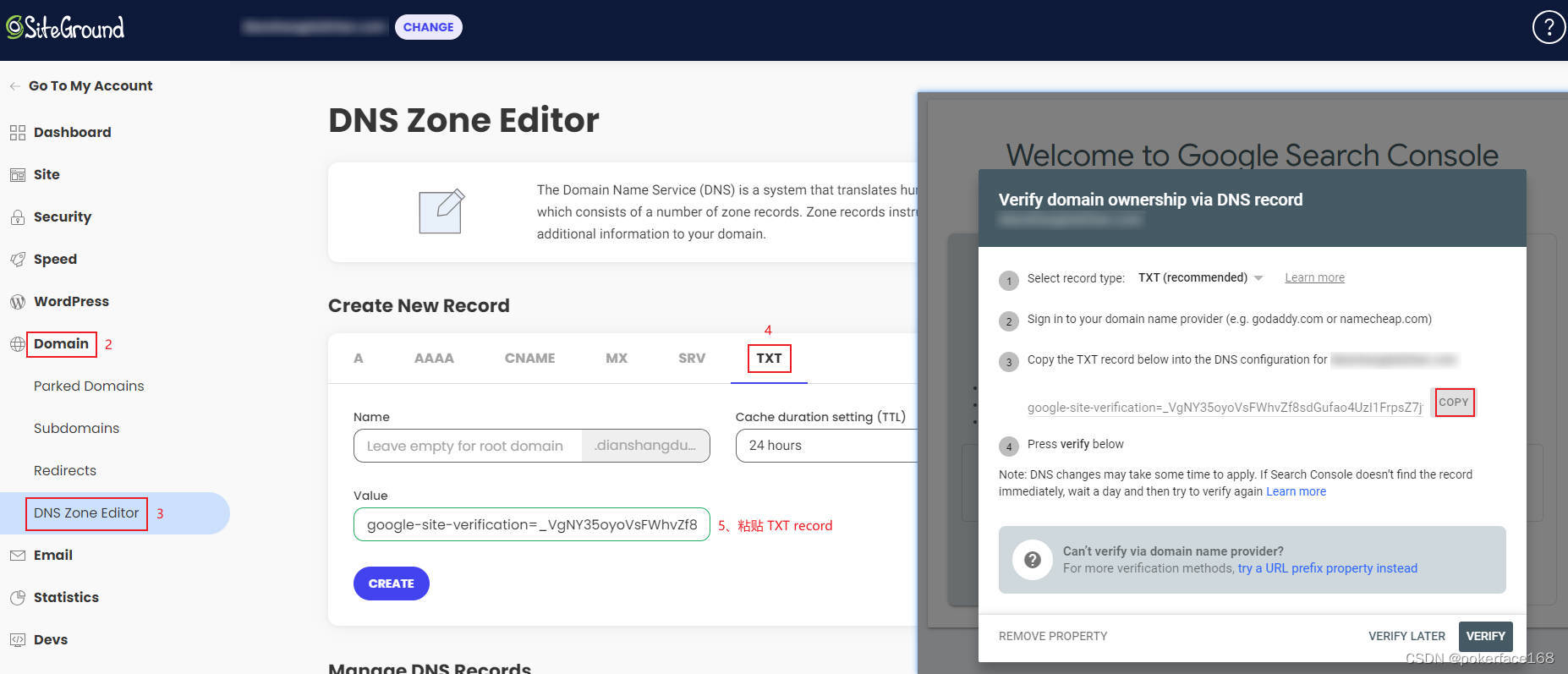

- Submit Sitemap and manually request indexing (Google Search Console’s “URL Inspection Tool”).

- Build a few high-quality backlinks, such as industry forum signatures and partner recommendation links.
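The sitemap step above can be sketched with Python's standard library. The example below writes a minimal XML sitemap following the sitemaps.org protocol. The URLs and dates are placeholders, and the resulting file still has to be uploaded to the site and submitted in Google Search Console by hand.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML bytes from (url, lastmod) pairs; lastmod is YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    # Placeholder URLs; replace with the site's real pages.
    xml_bytes = build_sitemap([
        ("https://example.com/", "2024-06-01"),
        ("https://example.com/blog/first-post", "2024-06-08"),
    ])
    with open("sitemap.xml", "wb") as handle:
        handle.write(xml_bytes)
    print(xml_bytes.decode("utf-8"))
```

Regenerating the file whenever a new article goes live keeps `lastmod` accurate, which fits the weekly publishing rhythm recommended in this section.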

What to do and what to avoid during the Sandbox Period?

Interviews with Google engineers reveal that a website’s behavior patterns are closely monitored during the Sandbox Period. Data shows that sites maintaining daily updates in the first 3 months have 83% more stable rankings later on than those with occasional updates (Moz 2024 long-term tracking).

New sites using CDN services have a crawl failure rate of up to 27% due to frequent IP address changes (Cloudflare Technical Report). Excessive use of the noindex tag during the Sandbox Period significantly prolongs the evaluation period, with an average delay of 19 days (Searchmetrics 2024 Technical Audit).
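To audit a page for a stray noindex directive, a small parser over the HTML is usually enough. The sketch below uses only the standard library and checks `<meta name="robots">` and `<meta name="googlebot">` tags. It does not look at the `X-Robots-Tag` HTTP header, and the class and function names are illustrative assumptions.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Record whether a robots or googlebot meta tag blocks indexing."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            directives = {d.strip().lower() for d in attr.get("content", "").split(",")}
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True


def has_noindex(html):
    """Return True if the HTML contains a meta tag that blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print("Blocked from indexing" if has_noindex(page) else "Indexable")
```

Running this over every URL in the sitemap is a quick way to catch templates or plugins that add noindex to pages that are meant to be indexed.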

What you should do:

- Prioritize user experience optimization: Ensure site loading speed is <2 seconds, and mobile adaptation is complete (via Google Mobile-Friendly Test).

- Publish 10-15 core content pieces: Cover major keywords to establish a base indexing volume.

- Monitor indexing status: Check Google Search Console weekly and promptly address “Excluded” or “Error” pages.

What you should not do:

- Buy backlinks in bulk: A sudden surge in low-quality PBN backlinks on a new site will be seen as ranking manipulation, prolonging the Sandbox Period.

- Frequently change website structure: Such as changing themes or batch URL redirects, which may lead to the crawler re-evaluating the site.

- Publish low-quality content: Content quality during the Sandbox Period directly affects later ranking potential.

Too Few Backlinks

According to Ahrefs 2024 research data, 93% of web pages do not acquire any organic backlinks, and 78% of these pages are never indexed by Google.

More specific data shows:

- The average indexed page has 3.2 external links (Moz 2024 Link Statistics)

- If a new website obtains fewer than 5 high-quality backlinks in the first 3 months, indexing speed will decrease by 40% (SEMrush 2024 experimental data)

- The number of pages Google’s crawler discovers through external links is 17 times the number discovered through direct access (Official Google Crawler Report)

Why does the number of backlinks directly affect indexing speed?

Data shows that pages with 1-5 backlinks are crawled an average of 1.2 times per week, while pages without backlinks are crawled only 0.3 times (DeepCrawl 2024 Log Analysis). Backlinks from high-authority domains can trigger Google’s “priority crawling” mechanism; new pages pointed to by such links are usually indexed within 48 hours. Five backlinks from five different domains are 3 times more effective than five backlinks from the same domain.

Google’s crawler primarily discovers new web pages through:

- 52% Through links from other websites

- 28% Through sitemap submission

- 20% Through internal links (Source: Googlebot Crawl Logs 2024)

Experimental data shows:

- A new page with no backlinks takes an average of 114 days to be indexed

- The same page, if it acquires 5 backlinks from medium-authority websites, shortens the indexing time to 27 days

- A single backlink from an authoritative website (DA>20) is equivalent to the effect of 20 ordinary backlinks

Solution:

- Prioritize obtaining backlinks on industry-relevant websites, such as:

- Blog comments within the same industry (must be dofollow)

- Local business directories

- Industry association websites

- Create linkable content resources, such as:

- Practical tools (e.g., online calculators)

- Original research reports

- Detailed guides and tutorials

How to acquire high-quality backlinks? (Specific methods)

The latest research finds that video content has a 40% higher efficiency in acquiring backlinks than text and images, especially tutorial videos, which bring an average of 11.3 natural backlinks (Wistia 2024 Video Marketing Report). After a deep update of existing ranked but outdated articles, the probability of naturally acquiring new backlinks increases by 65% (HubSpot Helpful Content Strategy Research).

For local businesses, participating in chamber of commerce activities and obtaining links on their official websites has excellent SEO results; the weight transfer efficiency is 8 times that of ordinary business directories (BrightLocal 2024 Local SEO Study).

Based on practical testing, these methods work best:

(1) Resource-Based Backlinks

- Create the ultimate guide for a specific vertical niche

- Case: A fishing website created the “2024 National Fishing Spot Map” and acquired 87 natural backlinks

- Cost: Approximately ¥2000 (Content + Design), with effects lasting over 3 years

(2) Expert Interviews

- Interview industry experts and publish the transcript

- An average of 3-5 backlinks can be acquired per interview (from the interviewee and their social network)

- Time investment: Approximately 5 hours per interview

(3) Data Visualization

- Convert public data into infographics

- Case: A fitness website converted the National Health Commission’s exercise data into charts and acquired 32 educational institution backlinks

- Production cost: Approximately ¥500 per chart

Points to note:

- Backlink growth should be natural, with an increase of 100-500 per month being optimal

- Anchor text should be diversified, with exact keyword matches not exceeding 20%

- Prioritize obtaining backlinks from different industries and regions

3 Backlink Mistakes to Absolutely Avoid

Google’s recently upgraded “Link Spam Detection System” can identify 98% of PBN (Private Blog Network) links (Google Anti-Spam Team 2024 Announcement). If backlinks from newly registered domains exceed 30% of the total, an algorithm alert is triggered.

Data shows that websites where the median age of backlink source domains is less than 2 months are 5 times more likely to be manually reviewed (Search Engine Land 2024 Risk Report).

In terms of anchor text, 3 consecutive exact match anchor texts may be flagged; it is recommended to have a gap of at least 15 different anchor texts.

According to Google’s penalty cases, these practices are the most dangerous:

(1) Bulk purchasing backlinks

- Characteristic: A sudden acquisition of a large number of backlinks (e.g., an increase of 1 million+ in one month)

- Risk: 87% of websites lose their rankings within 6 months (SEMrush data)

- Alternative: Natural building, with an increase of 100-500 per month

(2) Non-indexed backlinks

- Characteristic: From DA<1 forum signatures, Q&A websites

- Effect: These types of backlinks provide almost no help for indexing (Ahrefs test)

- Identification method: Check the content quality of the backlink page; if the formatting is messy, abandon it

(3) Over-optimized anchor text

- Safe proportions:

- Brand name: 40%

- Generic terms (e.g., “click here”): 30%

- Long-tail keywords: 20%

- Exact match keywords: <10%

- Exceeding this proportion may be judged as ranking manipulation
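The proportions above can be checked against an exported backlink list. The following sketch assumes each anchor text has already been labeled by hand with one of four hypothetical category names (`brand`, `generic`, `long_tail`, `exact`). It only computes the shares and flags the exact-match ceiling of 10% from the list above.

```python
from collections import Counter


def anchor_shares(labels):
    """Return the share of each anchor-text category in the backlink profile."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: count / total for category, count in counts.items()}


def exceeds_exact_match_limit(labels, limit=0.10):
    """True when exact-match anchors reach or pass the limit (safe is below 10%)."""
    return anchor_shares(labels).get("exact", 0.0) >= limit


if __name__ == "__main__":
    # Hypothetical labels, one per backlink.
    labels = ["brand"] * 8 + ["generic"] * 6 + ["long_tail"] * 4 + ["exact"] * 2
    for category, share in sorted(anchor_shares(labels).items()):
        print(f"{category:10s} {share:.0%}")
    if exceeds_exact_match_limit(labels):
        print("Exact-match anchors are at or above 10%; diversify anchor text.")
```

The labeling step is the judgment call here. The arithmetic only makes a drift toward exact-match anchors visible before it becomes a pattern.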

After optimizing these three points, 80% of websites can significantly improve their indexing rate within 3-6 months.