According to many SEO tool providers and industry observers, about 30% of websites experience this kind of “pain period” after major content updates, with core keyword rankings commonly dropping by 5-10 positions on average.

Seeing your rankings drop can be stressful, but this is often Google reevaluating your pages.

It might be that during content adjustments you unintentionally drifted away from what users really want to find (for example, missing the search intent), or you just happened to hit a Google algorithm update that caused fluctuations.

Next, we’ll analyze the 4 most common and urgent reasons to check first.


Technical issues or configuration errors affecting proper crawling and indexing

According to aggregated anonymous site data from some SEO service providers, in cases where rankings dropped due to updates, over 35% were ultimately traced back to technical or configuration errors.

Even more alarming, Google Search Console (GSC) data shows that over 25% of sites have long-standing indexing problems caused by technical errors in their coverage reports.

Indexing is mysteriously “actively blocked”: telling Google “Don’t crawl me!”

Real scenarios:

- CMS plugin/theme update traps: Updated your WordPress theme? Or changed your site framework (like React/Vue rendering logic)? Some plugins or theme settings might quietly add a `<meta name="robots" content="noindex">` tag, by default or during the update. It’s like hanging a “Closed for business” sign at the entrance of your newly renovated store for Google to see.

- Temporary measures you forgot to remove: You originally used `noindex` to keep Google from indexing a test page or an old version, but forgot to remove it after the updated content went live.

- `robots.txt` misconfiguration: A global or directory-level `robots.txt` file contains `Disallow: /` (block all crawlers) or `Disallow: /important-updated-page/` (block a specific updated directory), or rules with wrong paths, making the entire updated area uncrawlable. Stats show that 15% of indexing problems in GSC coverage reports are caused by robots.txt blocking.

- XML sitemap errors: You didn’t submit a new sitemap after updating content, the new sitemap left out important updated pages, or the sitemap itself has formatting errors or dead links. Google may then pick up these updates more slowly, or miss them entirely.
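
You can test your `robots.txt` rules against the updated URLs before they cost you traffic. Below is a minimal sketch using Python’s standard-library `urllib.robotparser`. The robots.txt contents and URLs are hypothetical. Python’s parser applies simple prefix matching in file order, so it does not fully replicate Google’s longest-match and wildcard handling. Treat it as a quick sanity check, not a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that accidentally blocks the updated directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /important-updated-page/
Disallow: /tmp/
"""

def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that these robots.txt rules would stop `agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(agent, url)]

updated_pages = [
    "https://example.com/important-updated-page/guide",
    "https://example.com/blog/new-post",
]
print(blocked_urls(ROBOTS_TXT, updated_pages))
# ['https://example.com/important-updated-page/guide']
```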

How to accurately troubleshoot (step-by-step):

- GSC is your detective tool: Go straight to the point. Open your Google Search Console:

- “Indexing” > “Pages” report (called “Coverage” in older versions of GSC): Focus on the pages that are not indexed. Carefully filter for the reasons “Excluded by ‘noindex’ tag” and “Blocked by robots.txt.” Over 25% of sites find indexing issues here that they didn’t know about. Is your updated page listed?

- “URL Inspection” tool: Enter the URL of your updated page. Check three key points: Is the page reported as indexed? Does it say it is blocked by a `noindex` tag? Does it say it is blocked by robots.txt? This is the most direct diagnostic report.


- Check the page HTML source code: Open your updated page in a browser and press `Ctrl+U` (Windows) or `Cmd+Option+U` (Mac) to view the source. Search for the robots meta tag or `noindex`, and check whether `content="noindex"` is present.

- Check the `robots.txt` file: Visit `yoursite.com/robots.txt` in your browser and read the contents. Confirm that no `Disallow:` rules accidentally block your updated page paths. Use online tools to test its syntax.

- Confirm sitemap submission and content: In GSC under “Indexing” > “Sitemaps,” check the submission status. Download your submitted sitemap file and verify that it includes the URLs of your updated pages. Use an XML validator to check the structure.
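
The HTML-source check can also be scripted, which helps when many pages were updated. Below is a minimal sketch with Python’s built-in `html.parser`. It looks for `noindex` in `<meta name="robots">` or `<meta name="googlebot">` tags and in an `X-Robots-Tag` response header value. Fetching the page and its headers is left out; the header value is passed in by hand, and the sample page is hypothetical.

```python
import re
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collect the content of <meta name="robots"> / <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}  # names arrive lowercased
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", ""))

def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if a robots meta tag or the X-Robots-Tag header value contains noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    sources = finder.directives + [x_robots_tag]
    return any(re.search(r"\bnoindex\b", s, re.IGNORECASE) for s in sources)

page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))  # True
```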

URL Changes and 301 Redirect Issues

Real Pain Points:

- Changed URL without setting up redirects: This is the most direct and fatal problem! For example, you change `/old-page/` to `/new-page/` but set up no redirect. Users clicking the old link get a `404` (page not found). Google’s crawlers hit a dead end, and the old page’s ranking authority instantly drops to zero. The new page might not even be crawled or ranked yet.

- Incorrect 301 redirect setup:

- Chained redirects: `/old-page/` → `/temp-page/` → `/new-page/`. Each extra hop wastes some link “juice,” lowers efficiency, and increases the chance of errors.

- Redirecting to the wrong target: Accidentally pointing to an unrelated page.

- Missing redirects for URLs with parameters: The old page might have multiple versions like `/old-page/?source=facebook`. If only `/old-page/` is redirected, those parameterized links still return 404.

- Redirects not working or returning wrong status codes: Server misconfiguration causes redirects to return `302` (temporary), `404`, or even `500` errors.


- Loss of “link equity” from external backlinks: All those valuable backlinks pointing to your old page lose their “vote” power because of faulty redirects. This is like throwing away precious SEO legacy. Studies show that effective 301 redirects can pass over 90% of link equity from old pages to new pages, while wrong or missing redirects drastically reduce or nullify that value.
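
Chains and loops are easy to spot if you export your redirect rules as a simple old-path to new-path mapping. That export format is an assumption; how you get it depends on your server or plugin. Below is a minimal Python sketch that follows each rule to its final destination, flags chains, and flattens them so every old URL points straight at its final target. It works on the rule table only and makes no HTTP requests.

```python
def resolve(redirects: dict[str, str], start: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow the redirect map from `start`; return (final URL, number of hops).

    Raises ValueError on a loop or an excessively long chain.
    """
    seen = {start}
    url, hops = start, 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain starting at {start}")
        seen.add(url)
    return url, hops

def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL directly at its final destination (one hop each)."""
    return {old: resolve(redirects, old)[0] for old in redirects}

rules = {"/old-page/": "/temp-page/", "/temp-page/": "/new-page/"}  # hypothetical export
for old in rules:
    final, hops = resolve(rules, old)
    if hops > 1:
        print(f"chain: {old} takes {hops} hops to reach {final}")
print(flatten(rules))
# {'/old-page/': '/new-page/', '/temp-page/': '/new-page/'}
```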

How to accurately troubleshoot:

- Simulate visiting “all” old URLs: Identify old URLs that used to rank (check the history in the GSC “Performance Report” or your own records). Manually visit each one in a private/incognito browser window:

- Do they instantly (status 301 or 308) redirect to the correct new URL?

- Or do they show a 404 error?

- Or redirect to a wrong or unrelated page?

- Check link equity transfer (external backlink audit):

- Use link analysis tools (Ahrefs or Semrush; the free versions work) to check backlinks to old pages.

- Visit some important backlink sources and see if they link to your old URL.

- Access that old URL and confirm if it redirects via 301/308 to the new page. Does the tool show the new page inheriting (part of) the old page’s link equity?

- Use GSC “Performance Report” comparison: Compare before-and-after periods to see impressions and clicks changes for old and new URLs. Does the old URL drop quickly to zero? Does the new page pick up traffic? Poor transfer might signal redirect issues.

- Server log analysis (advanced): Check your server logs for requests to old URLs and see what HTTP status codes Googlebot receives—301, 404, or others?
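
For the server-log check, here is a minimal sketch. It assumes your server writes the common Apache/Nginx “combined” log format; adjust the regular expression for other formats. It counts the status codes Googlebot received for old URLs and keeps parameterized variants separate, so a 404 on `?source=facebook` stands out. Matching on the user-agent string alone is a simplification: anyone can claim to be Googlebot, and Google recommends verifying it with a reverse DNS lookup. The log lines are hypothetical.

```python
import re
from collections import Counter

# Combined log format: ... "GET /path HTTP/1.1" status bytes "referer" "user-agent"
LOG_RE = re.compile(
    r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_statuses(lines, old_paths):
    """Count (requested path, status) pairs for Googlebot hits on old URLs."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.search(line)
        if not m or "Googlebot" not in m["agent"]:
            continue
        base_path = m["path"].split("?", 1)[0]  # match /old-page/?x=1 to /old-page/
        if base_path in old_paths:
            counts[(m["path"], m["status"])] += 1
    return counts

BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'192.0.2.10 - - [10/Oct/2024:13:55:36 +0000] "GET /old-page/ HTTP/1.1" 301 0 "-" "{BOT}"',
    f'192.0.2.10 - - [10/Oct/2024:13:56:01 +0000] "GET /old-page/?source=facebook HTTP/1.1" 404 512 "-" "{BOT}"',
    '198.51.100.7 - - [10/Oct/2024:13:57:12 +0000] "GET /old-page/ HTTP/1.1" 301 0 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
for (path, status), n in googlebot_statuses(SAMPLE_LOG, {"/old-page/"}).items():
    print(status, path, n)
```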

Server Errors and Page Performance Degradation

Performance issues exposed after updates:

- Sudden server errors: After updates, server load spikes, code conflicts, or config errors cause crawlers to frequently encounter:

- `5xx` server errors (especially `503 Service Unavailable`: server temporarily overloaded or under maintenance)

- `4xx` client errors (e.g., `403 Forbidden`: permission issues)

- Brief but frequent downtime leads Google to reduce its crawl rate or temporarily ignore pages.

- Page load speed significantly slower (especially on mobile):

- New bulky plugins/scripts, unoptimized huge images, or complex rendering slow down loading.

- Google’s page speed metrics (like LCP, INP, and CLS) get worse: Core Web Vitals are explicit Google ranking factors, and INP replaced FID as the responsiveness metric in March 2024. Data shows that when mobile page load time rises from 1 second to 3 seconds, bounce rate jumps 32%. Googlebot doesn’t “bounce,” but it logs loading efficiency; slow pages get less crawl budget and worse ranking evaluation.

- Failed resource loading (render-blocking): Newly referenced JavaScript or CSS/font files time out or fail to load, causing the page to “break” or render incompletely, so Google only gets partial content.

How to Accurately Troubleshoot:

- GSC Alerts and Inspection Tools:

- "Coverage" Report: Check if any pages are excluded from indexing due to server errors (5xx) or access denied (4xx).

- "URL Inspection" Tool: Test your updated pages directly. The report clearly tells you if there were any errors during crawling (status codes) and shows the rendered HTML (compare what Google actually sees vs. what the browser shows—are any key parts missing?).

- Speed Testing is a Must:

- Google PageSpeed Insights: Enter the URL of your updated page. Focus especially on the mobile score and diagnostic report. It will highlight specific slow factors (large images, render-blocking JS/CSS, slow server response, etc.). Compare the report before and after your update.

- Web.dev Measure or Lighthouse (Chrome DevTools): These also provide detailed speed diagnostics.

- Mobile-Friendliness Testing: Use Chrome DevTools’ device emulation (or run Lighthouse in mobile mode). After the update, do your page layout and functionality still work properly in a simulated mobile environment?
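
To make the before/after comparison concrete, you can bucket each Core Web Vitals value using Google’s published thresholds:

- LCP is good at 2.5 s or less and poor above 4 s.
- INP is good at 200 ms or less and poor above 500 ms.
- CLS is good at 0.1 or less and poor above 0.25.

Google assesses these at the 75th percentile of page loads. Below is a minimal sketch; the sample values are hypothetical.

```python
# (good_max, needs_improvement_max) per metric; anything above the second is "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless score
}

def rate(metric: str, value: float) -> str:
    good_max, ni_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= ni_max:
        return "needs improvement"
    return "poor"

def regressions(before: dict, after: dict) -> dict:
    """Return metrics whose rating got worse, mapped to (old rating, new rating)."""
    order = ["good", "needs improvement", "poor"]
    changes = {}
    for metric in THRESHOLDS:
        old, new = rate(metric, before[metric]), rate(metric, after[metric])
        if order.index(new) > order.index(old):
            changes[metric] = (old, new)
    return changes

before = {"LCP": 2100, "INP": 180, "CLS": 0.05}  # hypothetical p75 values
after = {"LCP": 3400, "INP": 190, "CLS": 0.12}
print(regressions(before, after))
# {'LCP': ('good', 'needs improvement'), 'CLS': ('good', 'needs improvement')}
```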

Worsened Compatibility Issues After Update

- Real Pitfalls:

- Ignoring "Mobile-First Indexing": Google primarily indexes and ranks based on mobile version content now. When updating desktop content, if you also change responsive layout logic, the mobile version might:

- Have key content hidden (e.g., via CSS `display: none`).

- Have a messy layout (text overlap, image overflow, unclickable buttons).

- Load too many unnecessary mobile resources.

- Plugin/Component Compatibility Issues: New sliders, forms, or interactive buttons might not display or work correctly on mobile.

- Poor Touch Experience: Buttons too small or spaced too tightly, leading to misclicks.

- How to Accurately Troubleshoot:

- Lighthouse in Chrome DevTools (mobile mode): Google retired its Mobile-Friendly Test tool and GSC’s “Mobile Usability” report in December 2023, so check the same problems yourself with Lighthouse and device emulation. Is text too small? Is the viewport set properly? Are links easy to tap?

- Real Device Testing: Use your phone and tablet (different sizes) to open the updated pages and interact manually. This is the most direct experience.

- Core Web Vitals (especially CLS - Cumulative Layout Shift): PageSpeed Insights reports mobile CLS values. A high CLS means page elements jump around a lot while the page is being used, which makes for a poor experience. Google considers a CLS of 0.1 or less "good". Did CLS get worse after your update?
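
How CLS is aggregated matters when you compare versions. Per Google’s definition, layout shifts are grouped into “session windows”: each shift comes less than 1 second after the previous one, and a window is capped at 5 seconds. Shifts within 500 ms of user input are ignored, and the page’s CLS is the largest window total. Below is a minimal Python sketch of that aggregation. It assumes you already have the individual shift scores and their recent-input flags, for example from the browser’s Layout Instability API. The sample data is hypothetical.

```python
def cumulative_layout_shift(shifts):
    """shifts: (start_time_ms, score, had_recent_input) tuples sorted by time."""
    cls = 0.0
    window_total = 0.0
    window_start = last_shift = 0.0
    for start, score, had_recent_input in shifts:
        if had_recent_input:
            continue  # shifts caused by recent user input do not count
        if window_total and start - last_shift < 1000 and start - window_start < 5000:
            window_total += score  # same session window
        else:
            window_total = score  # open a new session window
            window_start = start
        last_shift = start
        cls = max(cls, window_total)
    return cls

shifts = [(100, 0.05, False), (600, 0.04, False), (900, 0.30, True), (3000, 0.02, False)]
print(round(cumulative_layout_shift(shifts), 3))  # 0.09
```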

What to Do After Finding Technical Issues?

Use the above methods to precisely locate the exact problem (Is it noindex? robots.txt? Wrong 301 redirects? Server crash?)

Fix the issues: Remove wrong tags, correct robots.txt, set up 301 redirects properly, optimize code/images/server to fix speed issues, fix mobile layout, etc.

Use GSC tools to explicitly notify Google:

- After fixing indexing issues, click “Validate fix” in the relevant GSC report, or use “Request indexing” in the URL Inspection tool.

- After updating robots.txt or fixing server errors, wait for Google to recrawl (may take a few days).

- Submit the updated sitemap.

Be patient for recrawling and reassessment: After fixes, Google needs time to revisit the pages, process new info, and reevaluate rankings. Usually, you’ll see positive changes in days to weeks (depending on issue severity and site authority).
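
Before you submit the updated sitemap, confirm that it parses and actually lists the updated URLs. Below is a minimal sketch with Python’s built-in `xml.etree.ElementTree`. It assumes a standard `<urlset>` sitemap, not a sitemap index file. The sitemap content and URLs are hypothetical. A malformed file raises `ET.ParseError`, which catches basic formatting errors.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text: str) -> set[str]:
    """Return every <loc> value in a <urlset> sitemap (raises ET.ParseError if malformed)."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}

def missing_from_sitemap(xml_text: str, updated_urls: list[str]) -> list[str]:
    """Updated URLs that the sitemap does not list."""
    listed = sitemap_urls(xml_text)
    return [url for url in updated_urls if url not in listed]

SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/new-page/</loc></url>
  <url><loc>https://example.com/about/</loc></url>
</urlset>"""

print(missing_from_sitemap(SITEMAP, ["https://example.com/new-page/", "https://example.com/guide/"]))
# ['https://example.com/guide/']
```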

Content Quality Drop or Mismatch with User Search Intent After Update

You updated content, checked technicals, all good — but rankings still drop? Time to carefully review your content itself.

Updating doesn’t always mean better. Sometimes it can actually make things worse.

Don’t be stubborn: Ahrefs’ analysis of many update cases shows that about 40% of ranking drops after updates are directly linked to content quality issues or misaligned search intent.

At its core, Google’s ranking always rewards whoever best satisfies the real user need behind the search.

Refocus on "User Search Intent"

Why go off track?

- "I think users need this!": The biggest trap! Updates are often based on personal experience or internal company goals, not real user search data or feedback. You think explaining principles in detail matters, but users just want a quick 5-minute fix.

- Ignoring SERPs “traffic lights”: Not seriously analyzing the top 10 Google results for your target keywords before updating. What do they look like? What problems do they solve? Are they info blogs, how-to guides, product comparisons, or transactional pages? According to Ahrefs’ study of 2 million keywords, content matching specific search intent is 3x more likely to rank top 3.

- Too shallow keyword understanding: Only looking at the core keyword literally, without digging into long-tail variants and deeper problems. For example, searching “best running shoes” might mean “first pair for beginners,” “shoes for heavy people losing weight,” or “professional marathon racing shoes.” Different intents need very different content.

How to Precisely “Calibrate” Intent?

- Deep SERP Reconnaissance: Enter your core target keywords (for example, the keywords you want to optimize for the page you're updating) one by one into Google Search (preferably in incognito mode).

- What types of pages rank at the top? Lists? Q&A? Ultimate guides? Are videos taking the top spot? This directly tells you what kind of content Google thinks best satisfies the intent.

- “People also ask” and “Related searches” are goldmines! These are extensions of real user needs. Does your content cover these questions?

- Carefully read the top-ranking snippets and titles: What core info and keywords are they emphasizing? This is Google’s distilled “intent essence.”

- GSC Search Query Report is the Golden Key: Find the real search terms that have brought traffic to your updated page (and its previous versions) in the GSC “Performance Report.”

- What words did users use to find this page before? The top-ranking queries reflect what intent the content matched at that time.

- After the update, have these queries’ rankings and click-through rates changed significantly? A drop likely means the new content no longer matches those intents.

- Are there new, relevant queries showing up in the report? Maybe your update unintentionally catered to new intents that aren’t your target.

- Analyze User Engagement & Bounce (GA4):

- Compare before and after updates the average time on page and bounce rate. If bounce rate jumps more than 10% and time on page drops sharply, it strongly suggests users aren’t finding what they want.

- Break down traffic by core search terms to see which keywords bring the most dissatisfied users (i.e., the biggest intent mismatch).
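
You can run the before/after query comparison on two GSC Performance exports. Below is a minimal sketch. It assumes you have already loaded each period’s query rows into dictionaries. The `QueryStats` structure, the sample queries, and the 3-position threshold are all hypothetical choices. It lists queries that lost ground or disappeared, plus queries that are new after the update.

```python
from dataclasses import dataclass

@dataclass
class QueryStats:
    clicks: int
    impressions: int
    position: float  # average position; lower is better

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

def intent_drift(before: dict, after: dict, max_position_loss: float = 3.0):
    """Return (queries that lost ground or vanished, queries new after the update)."""
    lost = [
        query for query, old in before.items()
        if query not in after or after[query].position - old.position >= max_position_loss
    ]
    new = [query for query in after if query not in before]
    return lost, new

before = {
    "how to change car oil": QueryStats(120, 2000, 3.2),
    "car oil change steps": QueryStats(40, 900, 5.1),
}
after = {
    "how to change car oil": QueryStats(30, 1800, 9.4),
    "car oil history": QueryStats(5, 300, 12.0),
}
lost, new = intent_drift(before, after)
print("lost:", lost)
print("new:", new)
for query in lost:
    if query in after:
        print(f"{query}: CTR {before[query].ctr:.1%} -> {after[query].ctr:.1%}")
```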

What Value Did You Lose?

Common “Degradation” Actions:

- Removing high-value “unique info” from old content: For example, exclusive case study data, deep expert insights, especially practical tool recommendations, or free template downloads. These are often key to shares and good reviews.

- Diluting core keyword relevance: Trying to cover a broader range or adding new topics by forcing in a lot of less relevant peripheral info, which weakens the focus on the main topic. If Semrush keyword position tracking shows your core keywords dropping by more than 15 positions, that's a red flag.

- “Fluff” updates: Adding lots of filler, repetitive, or off-topic content just to meet a word count goal. This buries core info and reduces user reading efficiency.

- Structural chaos: Poor use of headings (H2, H3), or after updates the logic jumps around, paragraphs get too long, key info isn't highlighted (bold/emphasized enough), and clear lists or tables are missing.

How to Conduct a “Value Point Audit”?

- Open the new and old versions side by side: If you didn’t save an archive, try to recover old versions from a search engine cache (like the `cache:yourpage.com/old-page` command; note that Google retired its cache feature in 2024), historical snapshot tools (like the Wayback Machine), or your site database/article draft history.

- Mark up and compare paragraph by paragraph (or even sentence by sentence):

- Are the core questions still answered? The 1-3 key questions users care about—does the new content answer them clearly and better?

- Where did key data and insights go? Are unique data sources, cited studies, or specific tool recommendations removed or replaced with meaningless info?

- Core keyword coverage: How often did the old content naturally mention core keywords and their synonyms? Has the new content significantly reduced them or stuffed them in awkwardly? Use keyword density tools to compare (but prioritize natural flow over numbers).

- Are the “actionable value points” weakened? For how-to content: are clear steps simplified into vague descriptions? Removed code snippets/screenshots? Did old “Download Now” buttons disappear?

- Structure clarity comparison: Was the old version easier to skim? Are new titles clear enough? Is info organized smoothly? Are key points highlighted?

- Check internal linking strategy changes: Did you remove important internal links during updates? Or add links pointing to irrelevant or low-quality pages? This can weaken the authority signals of your page.
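
Once you have both versions as plain text, a quick diff shows which sentences the update removed, and a simple count shows how core-keyword mentions changed. Below is a minimal sketch using Python’s `difflib`. The sentence splitter and the substring-based keyword count are rough heuristics, and the sample texts are hypothetical.

```python
import difflib
import re

def sentences(text: str) -> list[str]:
    """Naive split on ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def removed_sentences(old: str, new: str) -> list[str]:
    """Sentences present in the old version but not in the new one."""
    diff = difflib.ndiff(sentences(old), sentences(new))
    return [line[2:] for line in diff if line.startswith("- ")]

def keyword_counts(text: str, terms: list[str]) -> dict[str, int]:
    """Case-insensitive substring counts (crude: 'oil' also matches 'oily')."""
    lower = text.lower()
    return {term: lower.count(term.lower()) for term in terms}

old = "Drain the old oil first. Use a 15 mm wrench. Download our free checklist."
new = "Drain the old oil first. Oil changes matter for engine health."
print(removed_sentences(old, new))
# ['Use a 15 mm wrench.', 'Download our free checklist.']
print(keyword_counts(old, ["oil"]), keyword_counts(new, ["oil"]))
# {'oil': 1} {'oil': 2}
```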

Does the New Content Really Deliver “Practical Use”?

Readability and User Experience (UX) Decline:

- Language too complex or jargon-heavy: Overusing industry terms and complicated sentence structures raises the reading barrier. A Flesch Reading Ease score below 60 (on a 0-100 scale) suggests the content may be hard for average readers.

- Key info “hide and seek”: Users can’t see core answers or summaries above the fold and have to scroll a long way to find them.

- Lack of necessary guidance: Especially for complex processes, no clear step breakdown, callout boxes, or progress indicators, leaving users confused.

- Design or layout distractions: Too many ads, irrelevant pop-ups, poor font/color contrast causing reading fatigue.
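
The Flesch Reading Ease score is computed with this formula:

206.835 − 1.015 × (words ÷ sentences) − 84.6 × (syllables ÷ words)

Below is a minimal Python sketch. Its vowel-group syllable counter is a crude heuristic, so scores will differ somewhat from dedicated readability tools. Use it for before/after comparisons of the same page rather than as an absolute number.

```python
import re

def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, ignore a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_reading_ease(text: str) -> float:
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / len(sentences)) - 84.6 * (syllables / len(words))

print(round(flesch_reading_ease("The cat sat on the mat."), 1))  # 116.1
```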

How to effectively conduct “usability stress testing”?

- Show it to strangers (real beginners): Find a friend or colleague who doesn’t know your field, give them the core search phrase you want to optimize (like “how to change car oil”), then have them look at the updated page. Ask them to complete the task and record:

- How long did it take them to find the core answer? (Within 30 seconds is key).

- Were they able to smoothly understand the steps? Where did they get stuck? (e.g., “I don’t understand this term,” “This part doesn’t explain what tools I need,” “The picture isn’t clear.”)

- What’s their overall impression after browsing? Confused? Satisfied? Or felt like their time was wasted?

- Enable “reading mode” or print test: Turn on the browser’s reading mode, or just print the page. This helps you strip away flashy design and see if the plain text content is logically coherent and direct enough. Any fluff or distractions will stand out here.

- Use readability evaluation tools:

- Hemingway Editor (free online): Highlights complex sentences, passive voice, and hard-to-understand adverbs to help you simplify language. Aim for Grade 8 or below (roughly middle school level) for broader accessibility.

- Grammarly (free version): Checks basic grammar mistakes and clarity.

- Optimize content structure:

- Always use clear H2/H3 subheadings that accurately summarize each section’s main point.

- Bold core conclusions, key steps, and important warnings.

- Prefer bullet lists for parallel info (like product feature comparisons or step lists).

- Use tables for complex processes or data comparisons.

- Add highlight boxes where appropriate (like “Important Tip: …”, “What you need: …”).

- Add clear, instructive screenshots or diagrams near key points (like before/after steps).
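
To review your subheading structure at a glance, you can pull the H1-H6 outline out of the page HTML. Below is a minimal sketch with Python’s `html.parser`. The `outline_problems` helper is hypothetical, and it only flags skipped levels (like an H2 followed directly by an H4) and empty headings. Whether each heading accurately summarizes its section is still a human judgment.

```python
from html.parser import HTMLParser

class HeadingOutline(HTMLParser):
    """Collect (level, text) for every <h1>-<h6> element, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._level = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._text).strip()))
            self._level = None

def outline_problems(page_html: str):
    """Return the heading outline plus a list of structural warnings."""
    parser = HeadingOutline()
    parser.feed(page_html)
    parser.close()
    problems = []
    previous = 1  # assume the page title is the H1
    for level, text in parser.headings:
        if level > previous + 1:
            problems.append(f"skipped level: H{previous} -> H{level} ({text!r})")
        if not text:
            problems.append(f"empty H{level}")
        previous = level
    return parser.headings, problems

page_html = "<h1>Oil change</h1><h2>Tools</h2><h4>Wrench size</h4><h2>Steps</h2><h3></h3>"
outline, problems = outline_problems(page_html)
for level, text in outline:
    print("  " * (level - 1) + f"H{level}: {text}")
print(problems)
```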

What to do if you find content intent mismatch?

Identify the root cause: Did you confirm the issue via SERP analysis, data comparison, or user testing? Was it a misunderstanding of user intent? Or did you miss the core value point?

Make strategic tweaks instead of blindly rolling back: Once you find the problem, make targeted additions, deletions, or reorganizations based on the current update:

- Intent deviation: Add content users really care about (like “People Also Ask” questions from SERP), and remove off-topic sections.

- Value point loss: Re-add unique valuable data sources, tool links, or deep insights that were mistakenly removed.

- Diluted relevance: Simplify or remove non-core content, strengthen discussion focused on the main keyword.

- Structure problems: Rebuild paragraph logic, use clearer subheadings, lists, tables, visual elements, and bold emphasis.

Small-scale testing and iteration: If possible, for important pages, first roll out the revised version in a test environment or on a copy page, then monitor short-term behavior data (like GSC click-through rates and GA session and bounce rates).

Resubmit for indexing: After finishing updates, use GSC’s “Request Indexing” on the URL to notify Google that the content is optimized.

Keep monitoring user feedback and ranking fluctuations: After changes, keep watching GSC search term reports and GA user behavior metrics to see if things improve.

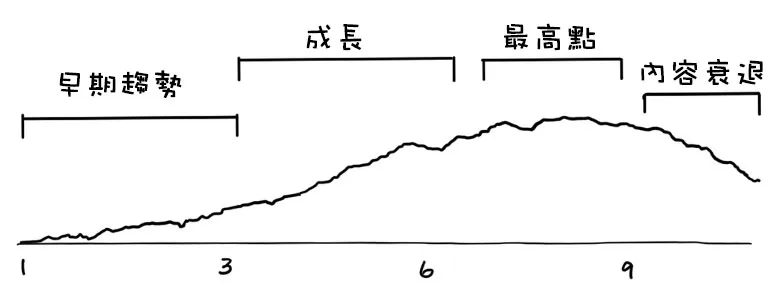

Happened to catch a Google algorithm update

Industry tracking by Search Engine Land shows Google does 5-7 confirmed core algorithm updates yearly, plus hundreds of minor tweaks.

If your update happens to land right during one of these update windows (especially those lasting weeks and impacting many sites), ranking fluctuations are likely more about the overall algorithm shakeup.

About 35% of sites reportedly experience unexpected big ranking drops during such updates. This is mostly because the algorithm is redefining “quality” standards, causing different sites’ strengths to rise and fall.

Confirm algorithm dynamics

Why shouldn’t you only focus on your own ranking drop?

- The algorithm is essentially a big reshuffle. While you’re dropping, others (maybe your competitors you dislike) might be rising. SEMrush’s Sensor tool tracking industry volatility shows that during major core updates, over 60% of sites experience big ranking swings (over 10 positions). Watching only your own corner can lead to wrong conclusions.

- Minor updates and core updates have totally different impacts. A small fix targeting spam comments is not the same as a core update redefining “high-quality content.”

How to quickly and reliably catch the “storm” signals?

- Keep a close eye on the “source triangle”:

- Google’s official “X” (formerly Twitter) accounts (such as @searchliaison): This is the most authoritative channel for update announcements, especially core updates. They often say things like: “Core update rollout starts tomorrow and will take weeks.” Pay attention to the wording: “Core Update” vs. “Improvements” or “Spam update”? Core updates have the biggest impact.

- Top industry news sites: Search Engine Land and Search Engine Journal update quickly and have special pages tracking update impacts (like SEL’s “Google Algorithm Update History”). They gather industry feedback fast and confirm the update scope.

- Trusted SEO experts: Practitioners like Barry Schwartz (@rustybrick), Marie Haynes, and Glenn Gabe (@glenngabe) share firsthand observations and analysis on X. Their insights are valuable, but cross-check them rather than blindly following any single voice.

- SEMrush Sensor / MozCast / Algoroo / Accuranker Grump: These tools show the overall “churn” of search engine results pages (SERPs) in real time or with daily updates. Suppose Semrush Sensor spikes to 8, 9, or 10 on its 0-10 scale (higher means more turbulent), or MozCast’s “temperature” runs unusually hot. If the reading stays high for several days, a major update has very likely happened.

- List your 3-5 main competitors. Use rank tracking tools (like SEMrush Position Tracking, Ahrefs Rank Tracker) or manually check some core keywords to see their ranking trends:

- Are they all dropping together? All rising? Or only you and a few others falling?

- Pay special attention to those competitors whose content update direction is similar and domain authority is close to yours. If they’re all dropping, it’s likely an algorithm shift; if they’re stable or rising but you’re dropping, the issue is more likely with your update.

- Moz surveys show that during algorithm updates, about 70% of SEOs prioritize analyzing competitor performance to figure out their own problem’s nature.
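
The two checks in the list above can be written down as simple rules: a sustained volatility spike, and whether competitors moved with you. Below is a minimal sketch. The 8-point threshold follows the Sensor guidance above, and the 3-day run is one reading of “several days.” The 3-position drop and the “at least half of competitors” rule are hypothetical cut-offs to tune. Position change here means new position minus old position, so a positive number is a drop.

```python
def sustained_spike(daily_scores: list[float], threshold: float = 8.0, min_days: int = 3) -> bool:
    """True if the volatility index stays at or above `threshold` for `min_days` days in a row."""
    run = 0
    for score in daily_scores:
        run = run + 1 if score >= threshold else 0
        if run >= min_days:
            return True
    return False

def diagnose(your_change: float, competitor_changes: list[float], drop: float = 3.0) -> str:
    """Changes are new position minus old position, so positive numbers are drops."""
    if your_change < drop:
        return "no significant drop"
    if not competitor_changes:
        return "add competitor data first"
    dropping = sum(1 for change in competitor_changes if change >= drop)
    if dropping * 2 >= len(competitor_changes):  # at least half fell with you
        return "likely an algorithm-wide shift"
    return "likely specific to your own update"

print(sustained_spike([4.1, 5.0, 8.6, 9.1, 9.4, 6.2]))  # True
print(diagnose(6, [5, 4, 1]))  # likely an algorithm-wide shift
print(diagnose(6, [0, -1, 1, 4]))  # likely specific to your own update
```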

Who does this update “favor” or “penalize”?

Can Google’s “mystery” be decoded?

- Google’s core update announcements are usually vague: “aimed at rewarding high-quality content,” but they rarely specify exactly what “high quality” means. Still, there are clues:

- Read carefully between the lines of official announcements: Even brief lines sometimes hint at key points, like “better evaluating page experience,” “understanding content expertise,” etc. Combine this with recent Google updates to their Search Quality Evaluator Guidelines (public documents).

- Industry deep-dive reports are goldmines: Big SEO agencies (like Moz, Search Engine Land, Backlinko, Semrush) publish detailed analysis after the update settles down. After analyzing thousands of changes, they often summarize common traits:

- After the October 2023 core update, many analyses pointed to more focus on user experience (especially page load speed and interactivity – Core Web Vitals) and deep, intent-satisfying content.

- Previous updates emphasized cracking down on content farms (aggregator sites), low-quality AI-generated content (not meeting EEAT), overly commercial affiliate pages (low user value).

- “Survivor” commonalities: Look at sites in your niche/industry whose rankings surged. What do their contents have in common? Super long articles? More authoritative references? Stronger expert endorsements? Very fast page speed? Better visual presentation? Sistrix case studies often reveal these “winner” patterns.

- Beware if your “site type” is a high-risk area:

- YMYL sites (health, money, legal advice, etc.): These are always sensitive during updates because Google’s requirements for EEAT (Experience, Expertise, Authority, Trustworthiness) are extremely high. Marie Haynes’ research shows that in EEAT-related core updates, YMYL sites are more than twice as likely to be negatively affected compared to other sites.

- E-commerce aggregator / affiliate sites: Content tends to be commercial and shallow, making it easier to be flagged as low value by the algorithm.

- Sites relying on massive low-quality content or over-optimization: These are prime targets for the algorithm crackdown.

Should you act now to fix things, or lie low?

During major core update periods (weeks of volatility) — the best strategy is to “stay calm”:

- A core update is a “re-evaluation,” not a “final judgment.” Google needs time to reprocess all content and produce new rankings. The official advice is usually to wait 1-2 weeks for the update to complete and rankings to stabilize before judging the impact. What you see now may just be chaos.

- Don’t make “big moves” like site redesigns now: Changing site content or structure a lot during this shaky period is like dancing on a rocking boat — you risk falling harder. You won’t be able to tell if your changes are right because the environment is still unstable.

- What to do? Focus on “monitoring” and “analyzing”:

- Keep tracking the industry trends and tool data (Sensor indexes) mentioned above.

- Closely watch your core keyword ranking trajectories in GSC and rank tracking tools (especially in the 1-2 weeks after the update finishes). Are rankings slowly recovering? Stabilizing at the bottom? Or continuing to fall?

- Deeply analyze GSC search performance reports: Which keywords dropped? Which remained stable or slightly rose? These keywords and their search intent offer valuable clues.

- Record all valuable info you observe (possible algorithm tendencies).
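
To keep the rollout period’s chaos out of your analysis, compare two windows. The first ends before the update started; the second begins only after rankings have had time to settle. Below is a minimal sketch. The 7-day window and 7-day settling period are assumptions in line with the 1-2 week wait suggested above. The rollout dates and daily positions are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

def comparison_windows(rollout_start: date, rollout_end: date, days: int = 7, settle_days: int = 7):
    """Return (before, after) inclusive date ranges that skip the rollout itself."""
    before = (rollout_start - timedelta(days=days), rollout_start - timedelta(days=1))
    after_start = rollout_end + timedelta(days=settle_days)
    after = (after_start, after_start + timedelta(days=days - 1))
    return before, after

def average_position(daily: dict[date, float], window: tuple[date, date]) -> Optional[float]:
    """Mean daily position inside the window, or None if there is no data."""
    start, end = window
    values = [pos for day, pos in daily.items() if start <= day <= end]
    return sum(values) / len(values) if values else None

before_window, after_window = comparison_windows(date(2024, 1, 10), date(2024, 1, 24))
print(f"before: {before_window[0]} to {before_window[1]}; after: {after_window[0]} to {after_window[1]}")
# before: 2024-01-03 to 2024-01-09; after: 2024-01-31 to 2024-02-06
```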

If rankings still drop sharply after the update stabilizes

Based on the above analysis (direction + your own data), pinpoint the issue:

- If your page is considered lacking in EEAT (especially YMYL): consider adding author authority introductions, citing or collaborating with authoritative institutions, adding case studies/real experience sharing, and strengthening citations with high-quality reference links.

- If the analysis points to user experience (Core Web Vitals) issues: fully optimize page speed (LCP, INP, and CLS; INP replaced FID in 2024), smooth interactions, and mobile adaptation.

- If the algorithm reassesses your content’s intent relevance as insufficient: go back to the content optimization principles covered earlier and recalibrate based on SERP and user data.

- If content depth or uniqueness is lacking: refer to top-ranking competitor content, add more valuable in-depth analysis and unique insights.

Make targeted and evidence-based improvements (avoid blind trial and error): Based on the pinpointed issue, make targeted content or technical adjustments. Don’t just start over from scratch.

Keep monitoring feedback after improvements: SEO recovery is a marathon. After improving specific points, continue to monitor ranking and traffic trends.

Updates causing original link signals or user signals to be damaged

These include the “voting weight” passed by backlinks and the “behavioral evidence” left by user visits.

Think about it: your previously well-performing content might have accumulated a lot of backlinks (also called external links) and also received good user dwell time and engagement data, but after updating, you may unintentionally:

- Cause those vote-carrying (backlink) links to “break” — for example, if you changed the page URL but messed up the redirects, old links lead to dead ends (404 errors).

- New content makes users unwilling to stay — after update, the page became complicated, hard to read, or navigation got confusing, so users skim and leave quickly (bounce rate spikes). Chartbeat data shows that if page load time goes from 1 second to 3 seconds, mobile user bounce rate increases by 32% on average.

- Internal “recommendations” got broken — internal links from other important pages were removed during the update.

Why does link equity “disappear”?

Root causes:

- No or wrong 301 redirect after URL changes: This is the worst kind of mistake. Ahrefs research clearly shows a correctly set 301 redirect can pass over 90% of the old page’s link equity to the new page. Conversely:

- No 301 at all: old links lead directly to 404, users and crawlers hit a wall, and all backlink value instantly vanishes.

- 301 redirect set to the wrong place: old links redirect to unrelated pages (like homepage or category pages), so the equity can’t effectively focus and pass to the real updated target page.

- Redirect chaining: `/old-page` → `/temp-page` → `/new-page`. Each redirect step causes some equity loss (called “link juice loss”). PassionDigital’s case study shows a second-level redirect causes a 25%-30% drop in the link equity passed.

- Missing redirects for old links with parameters: Old pages might have parameter variants like `/old-page?source=email`. If only `/old-page` has a 301, those parameterized links still return 404.

- Redirect setup errors: Server configuration issues cause requests that should return 301 to return 404 (not found) or 500 (server error).

- New content changed the “recommendation letter” from backlinks: For example, previously a high-quality original guide cited by many authoritative sites, but after updating you greatly cut core value, added tons of ads, or the topic shifted. Users coming from those authoritative backlinks find the content much worse, leave disappointed. Over time, Google perceives those backlinks as less valuable, reducing the link equity bonus. Backlink tools (like Semrush) sometimes warn about reduced anchor text relevance to the new page topic.
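
One way to close the parameter gap is to match redirect rules on the URL path only and carry the query string over to the new URL. Below is a minimal sketch using Python’s `urllib.parse`. The rule table is hypothetical, and in practice the same logic lives in your server or CMS redirect configuration. Whether to keep or drop tracking parameters on the target is a design choice; keeping them preserves campaign attribution.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical redirect rules keyed by path only (no query string).
REDIRECTS = {"/old-page": "/new-page"}

def redirect_target(url: str, redirects: dict[str, str] = REDIRECTS) -> Optional[str]:
    """Map any variant of an old URL (with or without parameters) to its new URL."""
    parts = urlsplit(url)
    target_path = redirects.get(parts.path)
    if target_path is None:
        return None  # no rule: this URL would fall through (often to a 404)
    # Keep the original query string so tracking parameters survive the 301.
    return urlunsplit((parts.scheme, parts.netloc, target_path, parts.query, ""))

print(redirect_target("https://example.com/old-page?source=email"))
# https://example.com/new-page?source=email
```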

How to diagnose “backlink signal decay”?

- GSC Performance Report is very helpful:

- Compare before and after updates, check the old URL’s impressions and clicks trend. Ideally, after update (with 301) the old URL data should quickly drop near zero, while the new URL should inherit corresponding traffic trends. If old URL traffic drops sharply but new URL doesn’t catch it, 301 likely didn’t work or wasn’t done properly.

- Watch the new page’s ranking changes along with impressions/CTR. If impressions hold or rise but CTR and rankings keep dropping, Google may consider your new page less relevant (possibly because it no longer matches the anchor text of its backlinks).

- Backlink tools “health reports”:

- Use Ahrefs (Site Explorer) or Semrush (Backlink Analytics):

- Find all backlinks pointing to the old URL. These are your precious assets.

- Spot-check referring pages: manually visit 5-10 important referring pages and click their links to your site. Do they correctly 301/308 redirect to the new target page? Is the redirect clean, with no chain or error along the way? (This is the simplest and most direct method.)

- Check how the tools evaluate the new page’s backlink profile: see whether backlinks pointing to the old URL are counted toward the new page (the tools try to follow 301s). If the new page’s backlink count is much lower than the old URL’s, the redirect or the tools’ tracking has a problem (or equity is being lost through a redirect chain).


301 / 308 (correct redirects)? Or 404 / 500 (errors)? This precisely shows what the crawler encountered.

Bad User Behavior Signals

What users are really telling us when they “vote with their feet”:

- Bounce Rate Spikes: In GA4, bounce rate is the percentage of sessions that were not engaged: sessions lasting 10 seconds or less (the default timer), with no key event and only one page view. It is simply the inverse of engagement rate, so both metrics reveal the same problem.

- If bounce rate jumps sharply after an update (say from 40% to over 60%), that’s a strong sign that users feel the page they clicked isn’t what they wanted, or that the content is so poor they don’t bother exploring and simply leave.

- Especially watch bounce rate from organic search traffic, as this is direct feedback on search intent.

- Average Engagement Time Drops Sharply: GA4 counts engagement time only while your page is in focus in the browser (the tab is in the foreground). If average engagement time drops from 3 minutes to 1.5 minutes after the update, the content has become less appealing or harder to use.

- Page CTR (Click Through Rate) Plummets: Check your new page URLs’ performance in the GSC “Performance Report.”

- Compared to the old page’s historical CTR at the same rank, does the new page’s CTR drop noticeably?

- If rankings hold steady or even rise slightly while CTR falls sharply (e.g. from 10% to 5%), that’s a dangerous “decoupling” signal: either your title tag or meta description isn’t enticing users to click (perhaps it no longer matches what they expect), or users who valued the old page aren’t interested in clicking the new version.

- Worsening Core User Experience Metrics:

- LCP (Largest Contentful Paint): Main images/text load too slowly (Google recommends LCP < 2.5s).

- INP (Interaction to Next Paint, which replaced FID as a Core Web Vital in March 2024): Clicking buttons or links feels laggy (Google suggests INP < 200ms).

- CLS (Cumulative Layout Shift): Page elements (like images, ads) jump or shift unexpectedly while browsing (Google suggests CLS < 0.1).

- If these metrics get worse after the update, especially INP and CLS, the user experience suffers badly, leading to short visits and high bounce rates. Google stats show pages with poor CLS see an average 15% drop in conversion rate.
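To make the bounce-rate and Core Web Vitals checks concrete, here is a small Python sketch. It applies GA4’s default engaged-session rule (longer than 10 seconds, or at least one key event, or two or more page views) and Google’s published boundaries for LCP (2.5 s / 4 s), INP (200 ms / 500 ms), and CLS (0.1 / 0.25). The `Session` fields are hypothetical stand-ins for whatever per-session data you have; GA4 computes these metrics for you, so this only shows how the numbers relate.

```python
from __future__ import annotations

from dataclasses import dataclass

# Core Web Vitals boundaries: (upper bound of "good", upper bound of "needs improvement")
CWV_THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


@dataclass
class Session:
    # Hypothetical per-session fields, not an actual GA4 export schema.
    duration_s: float
    key_events: int
    page_views: int


def is_engaged(s: Session, min_seconds: float = 10) -> bool:
    """GA4's default rule: longer than 10 s, or 1+ key event, or 2+ page views."""
    return s.duration_s > min_seconds or s.key_events >= 1 or s.page_views >= 2


def bounce_rate(sessions: list[Session]) -> float:
    """Share of sessions that were not engaged (i.e. 1 - engagement rate)."""
    if not sessions:
        return 0.0
    engaged = sum(is_engaged(s) for s in sessions)
    return 1 - engaged / len(sessions)


def rate_vital(metric: str, value: float) -> str:
    """Classify an LCP, INP, or CLS value as good / needs improvement / poor."""
    good_max, needs_improvement_max = CWV_THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    sessions = [Session(4, 0, 1), Session(35, 0, 1), Session(6, 0, 3), Session(3, 0, 1)]
    print(f"bounce rate: {bounce_rate(sessions):.0%}")  # bounce rate: 50%
    print(rate_vital("INP", 350))  # needs improvement
    print(rate_vital("CLS", 0.05))  # good
```

The third session counts as engaged despite lasting only 6 seconds, because it viewed three pages; that is why a short visit alone does not make a bounce in GA4.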

How to Accurately Catch “User Dissatisfaction”?

- GA4 is your main battlefield:

- Page Reports Comparison: Focus on your updated pages, set time ranges in GA4 (1 month before vs 1 month after update), and compare key metrics:

- Views: Is there any clear change in organic search traffic?

- Views per user: Are users viewing only that page and then leaving?

- Average engagement time: Is time on page longer or shorter?

- Event count / Key events (formerly Conversions): Are user interactions (clicks, scrolls) or key events down?

- Exploration (Explore) feature for deep dives: Create custom reports, segment by traffic source (set “Session default channel group” = “Organic Search”), and analyze user behavior on specific pages. Use comparisons to see the changes clearly.

- Google Search Console Performance Report is essential:

- Keep a close eye on the page’s CTR and average position changes (make sure the date range covers at least a month before and after the update).

- Check performance by different core search queries: Are CTR drops happening on specific query intents or across the board? This helps pinpoint what content areas went off track.

- PageSpeed Insights / Core Web Vitals report: Regularly test the updated page and track its LCP, INP, and CLS scores and diagnostic suggestions, so you can tell whether technical experience issues (speed, stability) have worsened and are driving users away.
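The before/after comparison above comes down to relative changes per metric. This Python sketch assumes you have copied one-month totals from GA4 and GSC into plain dictionaries (the metric keys are hypothetical labels, not export column names). It also encodes the “decoupling” check described earlier: average position roughly unchanged while CTR falls sharply. The default thresholds (a slip of at most 1 position, a CTR drop of at least 30%) are illustrative assumptions, not Google guidance.

```python
from __future__ import annotations


def pct_change(before: float, after: float) -> float | None:
    """Relative change from `before` to `after`; None if there is no baseline."""
    if before == 0:
        return None
    return (after - before) / before


def compare_periods(before: dict[str, float], after: dict[str, float]) -> dict[str, float | None]:
    """Relative change for every metric present in both periods."""
    return {k: pct_change(before[k], after[k]) for k in before if k in after}


def ctr_decoupled(before: dict[str, float], after: dict[str, float],
                  max_position_loss: float = 1.0, min_ctr_drop: float = 0.30) -> bool:
    """True when average position held (or improved) but CTR fell sharply.

    In GSC a lower average position is a better ranking, so a positive
    difference (after - before) means the page slipped.
    """
    position_loss = after["position"] - before["position"]
    ctr_change = pct_change(before["ctr"], after["ctr"])
    return (position_loss <= max_position_loss
            and ctr_change is not None
            and ctr_change <= -min_ctr_drop)


if __name__ == "__main__":
    # Hypothetical one-month totals copied from GSC and GA4 for one updated page.
    before = {"ctr": 0.10, "position": 4.2, "avg_engagement_s": 180, "organic_views": 5200}
    after = {"ctr": 0.05, "position": 4.0, "avg_engagement_s": 90, "organic_views": 3900}
    for metric, change in compare_periods(before, after).items():
        print(metric, "n/a" if change is None else f"{change:+.0%}")
    print("CTR decoupled from ranking:", ctr_decoupled(before, after))  # CTR decoupled from ranking: True
```

Keeping the position check separate from the CTR check matters: a CTR drop that comes with a large ranking loss points to a relevance problem, while a CTR drop at a stable position points to the title and meta description.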

Internal Link Structure Errors

The Value of Internal Links:

- Traffic Guidance & Crawl Paths: Internal links act as the main roadmap guiding users and Google crawlers around your site. Removing lots of important internal links to a page can isolate it, reducing internal link equity and lowering Google’s perceived importance of that page.

- Topic Relevance Signals: The anchor text of internal links pointing to a page (like “Detailed Guide on Google SEO Ranking Factors 2024”) sends key signals to Google about the page’s core topic. If you update and replace clear, relevant anchor texts with vague or unrelated ones (like “Click here for details”), you weaken that signal.
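One rough way to audit the anchor-text signal described above is to flag internal anchors that say nothing about the target page. The Python sketch below uses a hypothetical starter list of generic phrases plus a crude word-overlap test against the target page’s title; it is a heuristic for finding links to review by hand, not a model of how Google weighs anchor text.

```python
from __future__ import annotations

import re

# Hypothetical starter list; extend it with the generic phrases your site uses.
GENERIC_ANCHORS = {
    "click here", "click here for details", "here", "read more",
    "learn more", "more", "details", "this page", "link",
}
# Small stopword list so words like "on" or "the" don't count as topical overlap.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def anchor_issue(anchor: str, target_title: str) -> str | None:
    """Return a short reason if an internal anchor looks weak, else None."""
    cleaned = re.sub(r"\s+", " ", anchor.strip().lower()).strip(" .!:")
    if not cleaned:
        return "empty anchor"
    if cleaned in GENERIC_ANCHORS:
        return "generic anchor"
    overlap = (words(cleaned) - STOPWORDS) & (words(target_title) - STOPWORDS)
    if not overlap:
        return "no keyword overlap with target title"
    return None


if __name__ == "__main__":
    title = "Detailed Guide on Google SEO Ranking Factors 2024"
    for a in ["Google SEO ranking factors", "Click here for details", "our latest post"]:
        print(f"{a!r}: {anchor_issue(a, title)}")
```

A link flagged “no keyword overlap” is not necessarily wrong (synonyms and word stems are ignored here), so treat the output as a review list rather than a verdict.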

Common Pitfalls When Updating:

- Accidentally Deleting/Changing Key Entry Links: When updating navigation menus, breadcrumbs, homepage promos, related posts sections, or contextual links in content, you might remove or alter links pointing to your updated page.

- Incorrect Anchor Text Changes: For the sake of “diversity,” replacing strongly relevant anchor texts with unrelated or weakly related descriptions.

- New Content Versions Breaking Related Entries: For example, after reorganizing content, the automatically generated related posts widget might no longer include links to that page.

How to Diagnose Internal Link Damage?

- Put Site Crawling Tools to Work:

- Use Screaming Frog SEO Spider (the free version, capped at 500 URLs, is fine for smaller sites) or Sitebulb to crawl your site.

- Find your updated page URL: Locate it in the crawl results.

- Check the “Inlinks” report: This clearly shows how many internal pages link to this page, where each link comes from, and what anchor text is used.

- Compare before and after (if you have past crawl data): See if the number of internal links and main source pages (especially high-value ones like homepage or category pages) have dropped, or if key anchor texts have been weakened.

- Manually Check Key Nodes: Make sure your homepage, main category pages, and important pillar pages still have clear, quality internal links pointing to your updated page. Losing a featured box on the homepage can be a big hit.

- Review the Updated Page’s Own Content: Does the new content actively and properly link out to other high-value pages on your site? Rich, relevant internal links from the page show depth and integration, which can help rankings.
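If you kept a crawl from before the update, the before/after comparison can be scripted. The Python sketch below assumes each crawl’s inlinks were exported to CSV with Source, Destination, and Anchor columns (Screaming Frog’s “All Inlinks” bulk export includes columns with these names, but check the headers in your version); the URLs and helper names are hypothetical. For one target URL it lists source pages that stopped linking, new source pages, and anchor texts that disappeared or appeared.

```python
from __future__ import annotations

import csv
import io
from collections import defaultdict
from typing import Iterable, TextIO


def load_inlinks(stream: TextIO) -> list[dict[str, str]]:
    """Read an inlinks CSV export (assumed headers: Source, Destination, Anchor)."""
    return list(csv.DictReader(stream))


def inlink_profile(rows: Iterable[dict[str, str]], target: str) -> dict[str, set[str]]:
    """Map each page linking to `target` to the set of anchor texts it uses."""
    profile: dict[str, set[str]] = defaultdict(set)
    for row in rows:
        if row["Destination"] == target:
            profile[row["Source"]].add(row["Anchor"].strip())
    return dict(profile)


def diff_inlinks(before_rows, after_rows, target: str) -> dict[str, list[str]]:
    """Compare the internal links pointing at `target` in two crawls."""
    before = inlink_profile(before_rows, target)
    after = inlink_profile(after_rows, target)
    before_anchors = set().union(*before.values())
    after_anchors = set().union(*after.values())
    return {
        "lost_sources": sorted(before.keys() - after.keys()),
        "new_sources": sorted(after.keys() - before.keys()),
        "lost_anchors": sorted(before_anchors - after_anchors),
        "new_anchors": sorted(after_anchors - before_anchors),
    }


if __name__ == "__main__":
    # Tiny in-memory stand-ins for two crawl exports (hypothetical URLs).
    before_csv = io.StringIO(
        "Source,Destination,Anchor\n"
        "https://example.com/,https://example.com/guide/,SEO ranking factors guide\n"
        "https://example.com/blog/,https://example.com/guide/,ranking factors\n"
    )
    after_csv = io.StringIO(
        "Source,Destination,Anchor\n"
        "https://example.com/blog/,https://example.com/guide/,click here\n"
    )
    report = diff_inlinks(load_inlinks(before_csv), load_inlinks(after_csv),
                          "https://example.com/guide/")
    for key, urls in report.items():
        print(key, urls)
```

With real exports, pass `open(path, newline="", encoding="utf-8-sig")` instead of the in-memory `StringIO` objects (`utf-8-sig` also tolerates a byte-order mark). In the demo, the homepage appears under lost_sources and a descriptive anchor is replaced by “click here”, which are exactly the two kinds of damage described above.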

In the end, Google’s final vote always belongs to search users.

A temporary ranking drop is actually important feedback from both the algorithm and users.